I have a simple blog that dates back years. Most posts are about technology, but I also have a popular post about finding a song by its lyrics, which gets the lion's share of the traffic.

I have implemented my own analytics for incoming traffic:

- Every request that comes to the backend server gets logged in PostgreSQL

- When you view any page, an async XHR request is made and that's also logged in PostgreSQL

Most traffic terminates at the CDN. Most likely, the page you're reading right now was never rendered on my server but served straight from the CDN. It will still send an XHR request to my analytics backend, which in a sense serves as evidence that you're in a real, regular browser that supports JavaScript.
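To make the two kinds of logging concrete, here is a minimal server-side sketch as a plain WSGI app. It is not my actual implementation: SQLite stands in for PostgreSQL so it runs anywhere, and the `hits` table, its columns, and the `/api/pageview` endpoint are hypothetical. The browser side (the JavaScript that fires the request) isn't shown; the sketch only assumes it POSTs the viewed page's path as the request body.

```python
import sqlite3

# Hypothetical schema. SQLite stands in for PostgreSQL in this sketch.
SCHEMA = """
CREATE TABLE IF NOT EXISTS hits (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    kind TEXT NOT NULL,        -- 'request' (server-side) or 'pageview' (from JS)
    path TEXT NOT NULL,
    user_agent TEXT NOT NULL
)
"""

# Hypothetical endpoint that the page's JavaScript posts to.
BEACON_PATH = "/api/pageview"


def make_app(conn: sqlite3.Connection):
    conn.execute(SCHEMA)

    def log(kind: str, path: str, user_agent: str) -> None:
        conn.execute(
            "INSERT INTO hits (kind, path, user_agent) VALUES (?, ?, ?)",
            (kind, path, user_agent),
        )
        conn.commit()

    def app(environ, start_response):
        path = environ.get("PATH_INFO", "/")
        user_agent = environ.get("HTTP_USER_AGENT", "")
        if path == BEACON_PATH and environ.get("REQUEST_METHOD") == "POST":
            # Assumption: the request body is the path of the page being viewed.
            length = int(environ.get("CONTENT_LENGTH") or 0)
            page = environ["wsgi.input"].read(length).decode("utf-8")
            log("pageview", page, user_agent)
            start_response("204 No Content", [])
            return [b""]
        # Any other request reached the backend, i.e. it was not served by the CDN.
        log("request", path, user_agent)
        start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
        return [b"<html>...</html>"]

    return app
```

Because the pageview row is only written when JavaScript actually runs, comparing the `request` rows against the `pageview` rows is what makes the "real browser" distinction possible.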

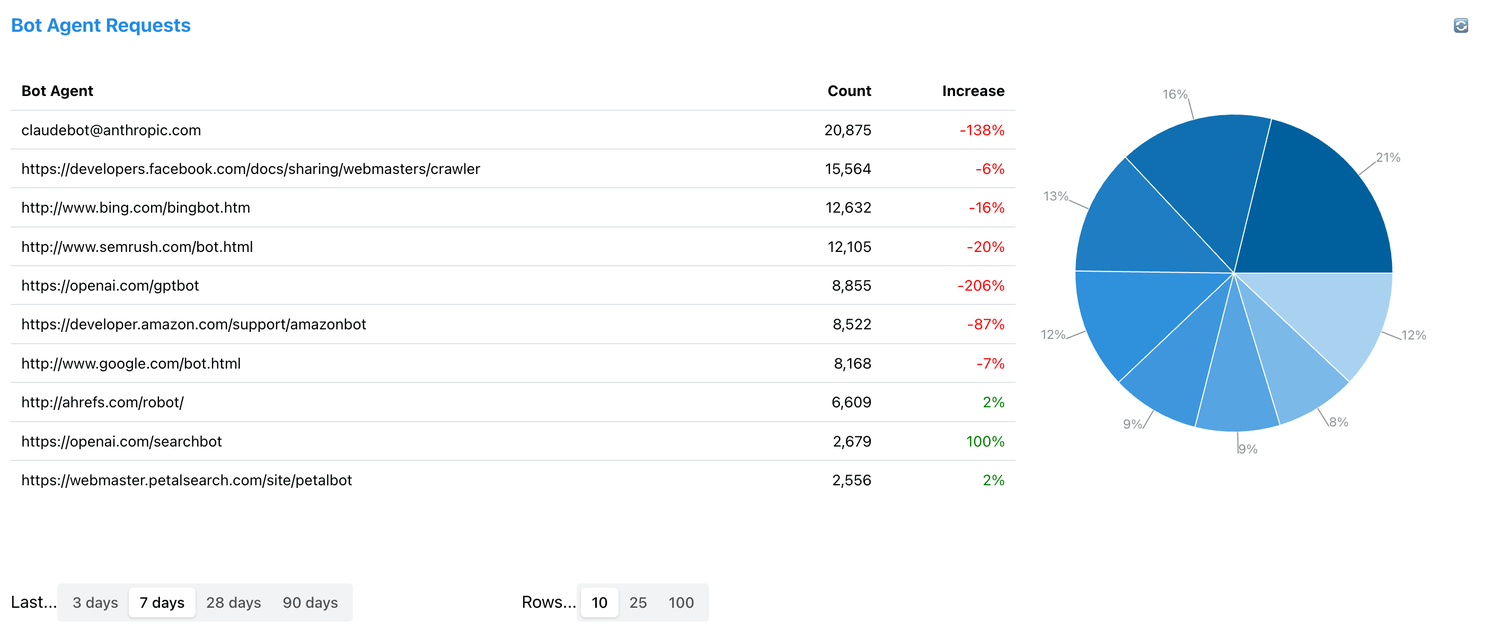

One thing I noticed is that the User-Agent of many incoming requests appears to belong to some sort of bot that is not Googlebot, which used to dominate the traffic on my blog.

Notable observations:

- Claude's bot makes a ton of traffic!

- OpenAI appears to have two bots ("gptbot" and "searchbot"), and together they make a lot of traffic

- What on earth is that Facebook crawler doing? Is it crawling for training Meta's LLMs?

- What is this Amazonbot and why is it generating as much traffic as Googlebot?
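Grouping requests by crawler like this comes down to case-insensitive substring matching on the User-Agent header. The sketch below is one way to do it; the token list is an assumption for illustration (for example, it uses `oai-searchbot` for OpenAI's search crawler and includes `meta-externalagent` for Meta), so check each vendor's published crawler documentation before relying on it.

```python
from collections import Counter
from typing import Iterable, Optional

# Assumed User-Agent tokens per crawler family (lowercase, substring match).
BOT_PATTERNS = {
    "Googlebot": ("googlebot",),
    "ClaudeBot": ("claudebot",),
    "OpenAI": ("gptbot", "oai-searchbot"),
    "Facebook": ("facebookexternalhit", "meta-externalagent"),
    "Amazonbot": ("amazonbot",),
}


def classify_user_agent(user_agent: str) -> Optional[str]:
    """Return the crawler family name, or None if no known token matches."""
    ua = user_agent.lower()
    for name, needles in BOT_PATTERNS.items():
        if any(needle in ua for needle in needles):
            return name
    return None


def count_bots(user_agents: Iterable[str]) -> Counter:
    """Count requests per crawler family, ignoring unrecognized user agents."""
    return Counter(
        name
        for ua in user_agents
        if (name := classify_user_agent(ua)) is not None
    )
```

Feeding it the `user_agent` column of the logged requests gives per-crawler totals like the ones in the chart above.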

JavaScript or not

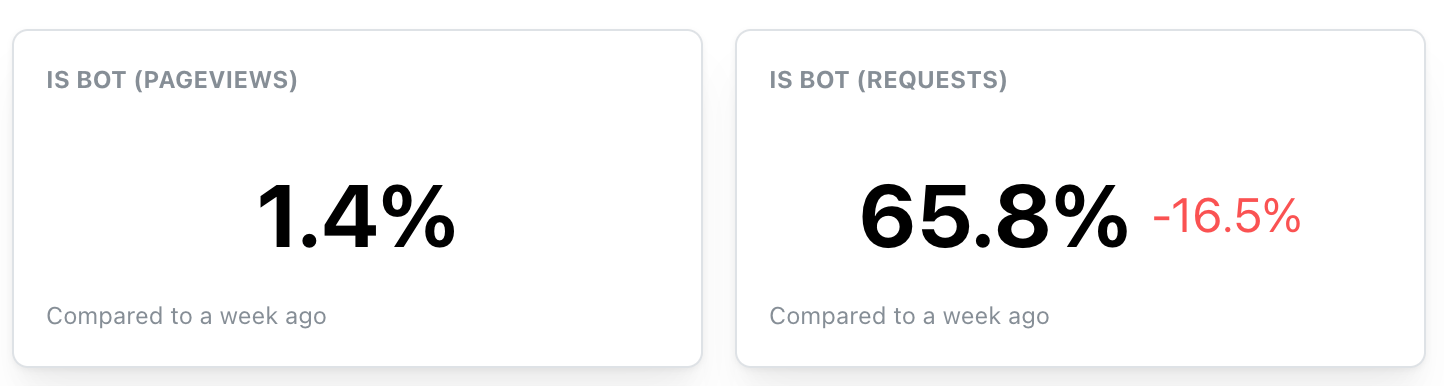

At the time of writing, I had only recently started tracking the User-Agent of pageviews, so I can't compare historical numbers. But generally, only about 1% of pageviews come from a bot User-Agent, whereas about 66% of direct server-side requests do.
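The two percentages are the same calculation applied to two sets of User-Agent strings: the pageview beacons and the server-side requests. Here is a small sketch of that calculation. The keyword heuristic is a hypothetical, deliberately rough one; it will miss any bot that spoofs a regular browser User-Agent, and the post doesn't say exactly how its numbers were classified.

```python
from typing import Iterable

# Hypothetical rough heuristic: tokens that many crawlers include in their User-Agent.
BOT_TOKENS = ("bot", "crawler", "spider", "facebookexternalhit")


def is_bot(user_agent: str) -> bool:
    ua = user_agent.lower()
    return any(token in ua for token in BOT_TOKENS)


def bot_share(user_agents: Iterable[str]) -> float:
    """Return the fraction (0.0 to 1.0) of user agents that look like bots."""
    total = 0
    bots = 0
    for ua in user_agents:
        total += 1
        if is_bot(ua):
            bots += 1
    return bots / total if total else 0.0
```

Calling `bot_share()` once on the pageview user agents and once on the server-side request user agents gives the two figures to compare.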

That means many of the bots don't render the page with JavaScript. Or perhaps they do, but they have some provision in place to avoid triggering the XHR requests to my analytics (which is implemented with sendBeacon).

The "-16.5%" drop is due to a fix I recently implemented that redirects traffic which bypassed the CDN and went straight to the backend.
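The post doesn't describe how that redirect works, so here is only one possible approach, sketched as WSGI middleware. It assumes the CDN forwards the public hostname in the `Host` header, so any request arriving with a different host (an origin hostname or a bare IP) has bypassed the CDN and gets a permanent redirect. `CANONICAL_HOST` is a hypothetical placeholder.

```python
from urllib.parse import quote

# Hypothetical public hostname that is served through the CDN.
CANONICAL_HOST = "www.example.com"


def redirect_bypassing_cdn(app):
    """Wrap a WSGI app so requests for any other host are redirected."""

    def middleware(environ, start_response):
        # Drop any port and compare case-insensitively.
        host = environ.get("HTTP_HOST", "").split(":")[0].lower()
        if host != CANONICAL_HOST:
            path = quote(environ.get("PATH_INFO", "/"))
            query = environ.get("QUERY_STRING", "")
            location = f"https://{CANONICAL_HOST}{path}"
            if query:
                location += f"?{query}"
            start_response("301 Moved Permanently", [("Location", location)])
            return [b""]
        return app(environ, start_response)

    return middleware
```

A redirect rather than an error means clients that hit the origin directly still end up on the right page, just via the CDN.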
